Tutorial 4: Reconstructing Past Changes in Atmospheric Climate

Week 1, Day 4, Paleoclimate

Content creators: Sloane Garelick

Content reviewers: Yosmely Bermúdez, Dionessa Biton, Katrina Dobson, Maria Gonzalez, Will Gregory, Nahid Hasan, Paul Heubel, Sherry Mi, Beatriz Cosenza Muralles, Brodie Pearson, Jenna Pearson, Chi Zhang, Ohad Zivan

Content editors: Yosmely Bermúdez, Paul Heubel, Zahra Khodakaramimaghsoud, Jenna Pearson, Agustina Pesce, Chi Zhang, Ohad Zivan

Production editors: Wesley Banfield, Paul Heubel, Jenna Pearson, Konstantine Tsafatinos, Chi Zhang, Ohad Zivan

Our 2024 Sponsors: CMIP, NFDI4Earth

Tutorial Objectives

Estimated timing of tutorial: 20 minutes

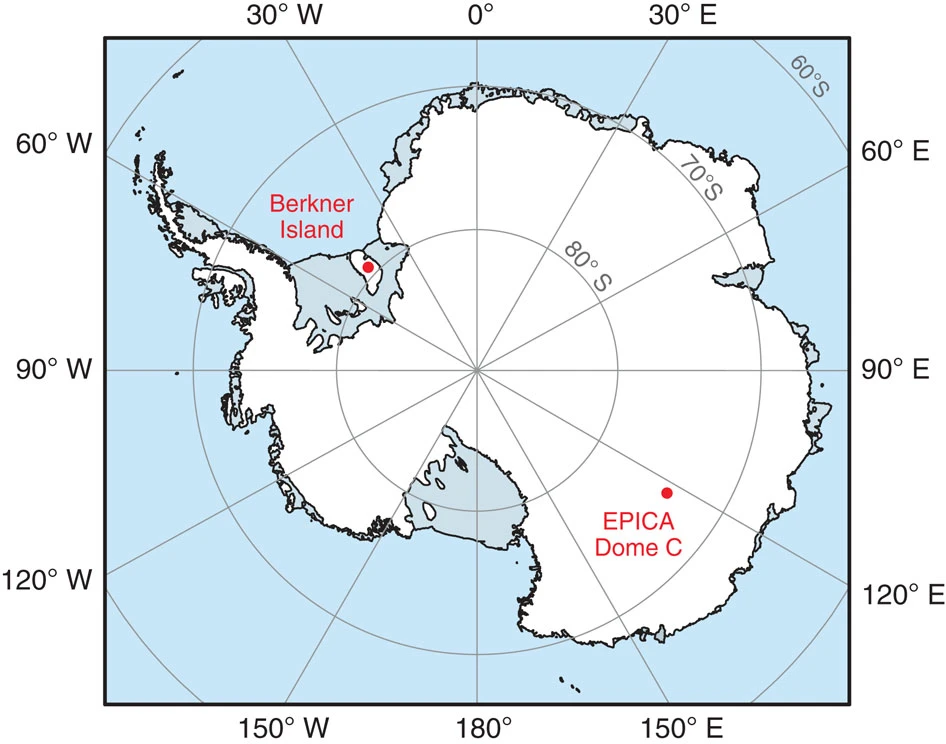

In this tutorial, we’ll analyze δD and atmospheric CO2 data from the EPICA Dome C ice core. Recall from the video that δD and δ18O measurements on ice cores record past changes in temperature and that measurements of CO2 trapped in ice cores can be used for reconstructing past changes in Earth’s atmospheric composition.

By the end of this tutorial you will be able to:

Plot δD and CO2 records from the EPICA Dome C ice core

Assess changes in temperature and atmospheric greenhouse gas concentration over the past 800,000 years

Setup

# installations (uncomment and run this cell ONLY when using Google Colab or Kaggle)

# !pip install pyleoclim

# imports

import pandas as pd

import matplotlib.pyplot as plt

import pooch

import os

import tempfile

import pyleoclim as pyleo

Install and import feedback gadget

# @title Install and import feedback gadget

!pip3 install vibecheck datatops --quiet

from vibecheck import DatatopsContentReviewContainer

def content_review(notebook_section: str):
    return DatatopsContentReviewContainer(
        "",  # No text prompt
        notebook_section,
        {
            "url": "https://pmyvdlilci.execute-api.us-east-1.amazonaws.com/klab",
            "name": "comptools_4clim",
            "user_key": "l5jpxuee",
        },
    ).render()

feedback_prefix = "W1D4_T4"

Figure Settings

# @title Figure Settings

import ipywidgets as widgets # interactive display

%config InlineBackend.figure_format = 'retina'

plt.style.use(

"https://raw.githubusercontent.com/neuromatch/climate-course-content/main/cma.mplstyle"

)

Helper functions

# @title Helper functions

def pooch_load(filelocation=None, filename=None, processor=None):
    shared_location = "/home/jovyan/shared/Data/tutorials/W1D4_Paleoclimate"  # this is different for each day
    user_temp_cache = tempfile.gettempdir()

    # use the shared copy if it exists; otherwise download into the temp directory
    if os.path.exists(os.path.join(shared_location, filename)):
        file = os.path.join(shared_location, filename)
    else:
        file = pooch.retrieve(
            filelocation,
            known_hash=None,
            fname=os.path.join(user_temp_cache, filename),
            processor=processor,
        )

    return file

Video 1: Atmospheric Climate Proxies

Submit your feedback

# @title Submit your feedback

content_review(f"{feedback_prefix}_Atmospheric_Climate_Proxies_Video")

If you want to download the slides: https://osf.io/download/szyhp/

Submit your feedback

# @title Submit your feedback

content_review(f"{feedback_prefix}_Atmospheric_Climate_Proxies_Slides")

Section 1: Exploring past variations in atmospheric CO2

As we learned in the video, paleoclimatologists can reconstruct past changes in atmospheric composition by measuring gases trapped in layers of ice from ice cores retrieved from polar regions and high-elevation mountain glaciers. We’ll specifically be focusing on paleoclimate records produced from the EPICA Dome C ice core from Antarctica.

Credit: Conway et al 2015, Nature Communications

Let’s start by downloading the data for the composite CO2 record for EPICA Dome C in Antarctica:

# download the data using the url

filename_antarctica2015 = "antarctica2015co2composite.txt"

url_antarctica2015 = "https://www.ncei.noaa.gov/pub/data/paleo/icecore/antarctica/antarctica2015co2composite.txt"

data_path = pooch_load(
    filelocation=url_antarctica2015, filename=filename_antarctica2015
)

# open the file, skipping the first 137 lines (file header)
co2df = pd.read_csv(data_path, skiprows=137, sep="\t")

co2df.head()
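Remote archives can be slow to respond, and a download may occasionally time out. The following is a minimal sketch of one way to retry such a call a few times before giving up. The `retry` helper, the `flaky_download` stand-in, and the file path it returns are hypothetical names introduced for illustration; they are not part of pooch or of this tutorial's helper functions.

```python
import time


def retry(func, attempts=3, delay=1.0, exceptions=(Exception,)):
    """Call func() up to `attempts` times, pausing `delay` seconds after each failure.

    Hypothetical helper for illustration; not part of pooch or this tutorial.
    """
    for attempt in range(1, attempts + 1):
        try:
            return func()
        except exceptions as err:
            if attempt == attempts:
                raise  # out of attempts: re-raise the last error
            print(f"Attempt {attempt} failed ({err!r}); retrying in {delay} s")
            time.sleep(delay)


# Demonstration with a stand-in for a flaky download (no network needed)
calls = {"n": 0}


def flaky_download():
    """Fail twice with a simulated timeout, then return a (made-up) file path."""
    calls["n"] += 1
    if calls["n"] < 3:
        raise TimeoutError("simulated read timeout")
    return "/tmp/antarctica2015co2composite.txt"


path = retry(flaky_download, attempts=5, delay=0.01, exceptions=(TimeoutError,))
print(path, "after", calls["n"], "calls")
```

In the notebook, the same wrapper could be applied to the real download, for example `retry(lambda: pooch_load(filelocation=url_antarctica2015, filename=filename_antarctica2015))`.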

Next, we can store this data as a Series in Pyleoclim:

ts_co2 = pyleo.Series(

time=co2df["age_gas_calBP"] / 1000,

value=co2df["co2_ppm"],

time_name="Age",

time_unit="kyr BP",

value_name=r"$CO_2$",

value_unit="ppm",

label="EPICA Dome C CO2",

)

We can now plot age vs. CO2 from EPICA Dome C:

ts_co2.plot(color="C1")

Notice that the x-axis is plotted with present-day (0 kyr) on the left and the past (800 kyr) on the right. This is a common practice when plotting paleoclimate time series data.
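To make the age axis concrete, here is a small sketch that converts ages in kyr BP to calendar years. It assumes the common convention that "present" in "BP" (before present) means 1950 CE; the function name `kyr_bp_to_year_ce` is hypothetical and chosen only for this illustration.

```python
def kyr_bp_to_year_ce(age_kyr_bp):
    """Convert an age in kyr BP to a calendar year (CE).

    Assumes 'present' is 1950 CE, the usual convention for BP ages.
    Negative results correspond to years before the Common Era.
    """
    return 1950 - age_kyr_bp * 1000


for age in (0, 20, 800):
    print(f"{age:>4} kyr BP -> year {kyr_bp_to_year_ce(age):>8} CE")
```

Under this convention, larger ages lie further in the past, which is why time runs from right to left on the plot above.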

These changes in CO2 track the glacial-interglacial cycles (ice ages) of the past 800,000 years. Recall that these cycles are paced by variations in Earth’s orbit: eccentricity (100,000 year cycle), obliquity (40,000 year cycle), and precession (21,000 year cycle). Can you spot these cycles in the graph above?

Section 2: Exploring the relationship between δD and atmospheric CO2

To investigate the relationship between glacial cycles, atmospheric CO2 and temperature, we can compare CO2 to a record of the hydrogen isotopic composition (δD) of the ice, which serves as a proxy for temperature in this case. Remember, when interpreting isotopic measurements of ice cores, a more depleted δD value indicates cooler temperatures, and a more enriched δD value indicates warmer temperatures. This is the opposite of the relationship we looked at previously with δ18O, not because we are looking at a different isotope, but because we are now looking at the isotopic composition of ice rather than the isotopic composition of the ocean.
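As a reminder of what "depleted" and "enriched" mean numerically, the sketch below computes a δ value from an isotope ratio using the standard delta notation, δ = (R_sample / R_standard − 1) × 1000 ‰, where R is the D/H ratio. The reference ratio used here is the approximate D/H ratio of the VSMOW standard, and the two sample ratios are hypothetical values chosen only to illustrate the sign convention; they are not EPICA measurements.

```python
R_VSMOW = 155.76e-6  # approximate D/H ratio of the VSMOW reference water


def delta_permil(r_sample, r_standard=R_VSMOW):
    """Return the delta value (per mil) of a sample ratio relative to a standard."""
    return (r_sample / r_standard - 1.0) * 1000.0


# Hypothetical sample ratios, for illustration only
cold_ice = 0.56 * R_VSMOW  # strongly depleted in deuterium
warm_ice = 0.62 * R_VSMOW  # less depleted

print(f"colder sample: {delta_permil(cold_ice):.0f} per mil")
print(f"warmer sample: {delta_permil(warm_ice):.0f} per mil")
```

Both values are negative because the samples contain less deuterium than the standard; the more negative (more depleted) value corresponds to the colder case.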

Let’s download the EPICA Dome C δD data, store it as a Series, and plot the data:

# download the data using the url

filename_edc3deuttemp2007 = "edc3deuttemp2007.txt"

url_edc3deuttemp2007 = "https://www.ncei.noaa.gov/pub/data/paleo/icecore/antarctica/epica_domec/edc3deuttemp2007.txt"

data_path = pooch_load(
    filelocation=url_edc3deuttemp2007, filename=filename_edc3deuttemp2007
)

# open the file, skipping the first 91 lines (file header); columns are whitespace-separated
dDdf = pd.read_csv(data_path, skiprows=91, encoding="unicode_escape", sep=r"\s+")

# remove nan values

dDdf.dropna(inplace=True)

dDdf.head()

dDts = pyleo.Series(

time=dDdf["Age"] / 1000,

value=dDdf["Deuterium"],

time_name="Age",

time_unit="kyr BP",

value_name=r"$\delta D$",

value_unit="\u2030",

label=r"EPICA Dome C $\delta D$",

)

dDts.plot()

When we look at the δD data, we see patterns very similar to those in the atmospheric CO2 data. To compare the two records more easily, we can plot them together by putting them into a MultipleSeries object. Since the δD and CO2 values have different units, we first standardize the series and then plot the data.

# combine series

ms = pyleo.MultipleSeries([dDts, ts_co2])

# standardize series and plot

ms.standardize().plot()
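Standardizing a series means subtracting its mean and dividing by its standard deviation, so that records with different units end up on a common, unitless scale. The following is a minimal sketch of that idea in plain Python. It uses the population standard deviation, which is an assumption and may differ in detail from what Pyleoclim does internally, and the input values are hypothetical rather than taken from the EPICA records.

```python
from statistics import fmean, pstdev


def standardize(values):
    """Rescale values to zero mean and unit standard deviation (z-scores)."""
    mean = fmean(values)
    std = pstdev(values)  # population standard deviation (assumed convention)
    return [(v - mean) / std for v in values]


# Hypothetical values, for illustration only (not taken from the EPICA records)
dD_permil = [-440.0, -420.0, -390.0, -380.0, -410.0]
co2_ppm = [190.0, 210.0, 270.0, 280.0, 230.0]

for name, series in (("dD", dD_permil), ("CO2", co2_ppm)):
    z = standardize(series)
    print(name, [round(v, 2) for v in z])
```

After this step both series vary around zero with comparable amplitude, which is what makes it possible to overlay them on one axis.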

Now we can more easily compare the timing and magnitude of changes in CO2 and δD at EPICA Dome C over the past 800,000 years. During glacial periods, δD was more depleted (cooler temperatures) and atmospheric CO2 was lower. During interglacial periods, δD was more enriched (warmer temperatures) and atmospheric CO2 was higher.
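One way to put a number on how closely two records covary is to interpolate them onto a shared age grid and compute a correlation coefficient. The sketch below does this with linear interpolation and the Pearson correlation in plain Python. The helper names `interp` and `pearson`, the 10-kyr grid, and all data values are hypothetical and chosen only for illustration; this is not how the tutorial or Pyleoclim compares the series.

```python
from bisect import bisect_left
from math import sqrt


def interp(x, xs, ys):
    """Linearly interpolate the record (xs, ys) at x.

    xs must be strictly increasing and x must lie within [xs[0], xs[-1]].
    """
    i = bisect_left(xs, x)
    if xs[i] == x:
        return ys[i]
    x0, x1, y0, y1 = xs[i - 1], xs[i], ys[i - 1], ys[i]
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


def pearson(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((u - ma) * (v - mb) for u, v in zip(a, b))
    return cov / sqrt(sum((u - ma) ** 2 for u in a) * sum((v - mb) ** 2 for v in b))


# Hypothetical, unevenly sampled records (ages in kyr BP); not real EPICA data
age_dD = [0, 10, 20, 60, 100, 130]
dD = [-395, -400, -440, -425, -415, -380]
age_co2 = [0, 15, 25, 70, 110, 130]
co2 = [280, 240, 190, 220, 235, 285]

common_age = list(range(0, 131, 10))  # shared 10-kyr grid
dD_i = [interp(t, age_dD, dD) for t in common_age]
co2_i = [interp(t, age_co2, co2) for t in common_age]

r = pearson(dD_i, co2_i)
print(f"Pearson r = {r:.2f}")
```

A value of r close to 1 indicates that the two records rise and fall together. Note that a correlation coefficient alone says nothing about which variable leads the other or about causation.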

Questions 2: Climate Connection

1. Why do δD, CO2 and glacial cycles covary so closely?

2. Can you identify glacial and interglacial periods? Today, are we in an interglacial or glacial period?

3. Do the cooling and warming periods of the cycles happen at the same rate?

4. What climate forcings do you think are driving these cycles?

# to_remove explanation

"""

1. During glacial periods, lower temperatures lead to more oceanic absorption of CO2, reducing atmospheric CO2 levels. During interglacial periods, the reverse happens. This mutual influence creates a feedback loop, resulting in the observed covariance between dD (a proxy for temperature), CO2 and glacial-interglacial cycles.

2. The glacial periods occur when dD is more negative (depleted) and atmospheric CO2 concentrations are lower, whereas interglacial periods occur when dD is more positive (enriched) and atmospheric CO2 concentrations are higher. Based on this, the most recent glacial period peaked ~20,000 years ago. Today, we are in an interglacial period. However, notice how the atmospheric CO2 concentration rapidly increases towards the present and exceeds the CO2 concentration of any other interglacial period over the past 800,000 years. What might be causing this?

3. The cooling periods tend to be more gradual while warming periods are relatively rapid. This pattern is especially apparent over the past 400,000 years.

4. These glacial-interglacial cycles are primarily driven by variations in Earth’s orbital parameters. These parameters include eccentricity, obliquity, and precession, which vary on timescales of ~100,000 years, ~40,000 years, and ~21,000 years, respectively. In later tutorials, we’ll learn how to identify temporal cyclicity in paleoclimate reconstructions and assess possible forcings with similar timescales of variation.

"""

Submit your feedback

# @title Submit your feedback

content_review(f"{feedback_prefix}_Questions_2")

Summary

In this tutorial, we analyzed hydrogen isotope (δD) and atmospheric CO2 data from the EPICA Dome C ice core. Along the way, we saw how δD and δ18O measurements from ice cores record past temperature changes, and how CO2 trapped in these ice cores helps us reconstruct shifts in Earth’s atmospheric composition.

By the end of the tutorial, you should be comfortable with plotting δD and CO2 records from the EPICA Dome C ice core and assessing changes in temperature and atmospheric greenhouse gas concentrations over the past 800,000 years. In the next tutorial, we’ll introduce various paleoclimate data analysis tools.

Resources

Code for this tutorial is based on an existing notebook from LinkedEarth that explores EPICA Dome C paleoclimate records.

Data from the following sources are used in this tutorial:

Jouzel, J., et al. Orbital and Millennial Antarctic Climate Variability over the Past 800,000 Years, Science (2007). https://doi.org/10.1126/science.1141038.

Lüthi, D., Le Floch, M., Bereiter, B. et al. High-resolution carbon dioxide concentration record 650,000–800,000 years before present. Nature 453, 379–382 (2008). https://doi.org/10.1038/nature06949.

Bereiter, B. et al. Revision of the EPICA Dome C CO2 record from 800 to 600 kyr before present, Geophys. Res. Lett. (2014). https://doi.org/10.1002/2014GL061957.